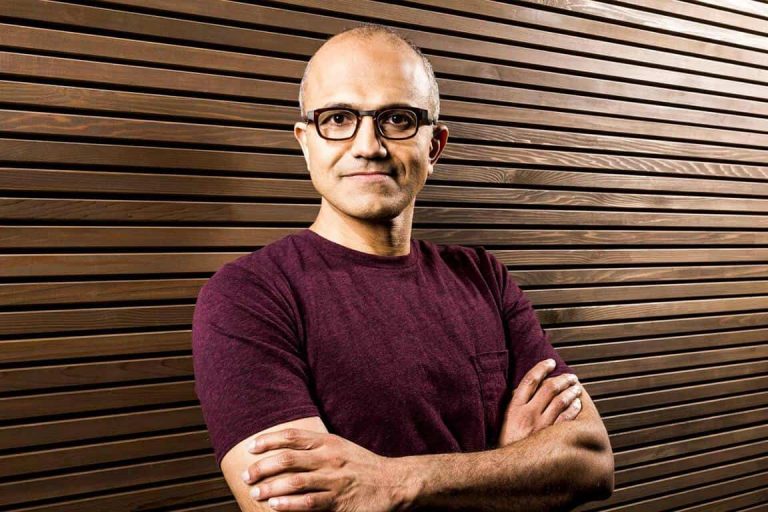

Microsoft is making significant investments in machine learning, bots, and artificial intelligence in general. Indeed, Build 2016 was full of A.I., and the company clearly sees the technology as vital to its “mobile-first, cloud-first” productivity strategy going forward. In an editorial published on Slate, Microsoft CEO Satya Nadella explained why the company considers A.I. so important.

Nadella goes into the topic in some detail, focusing on how A.I. and human intelligence can work together to accomplish goals. He also calls for collaboration on the development of A.I.

> Advanced machine learning, also known as artificial intelligence or just A.I., holds far greater promise than unsettling headlines about computers beating humans at games like Jeopardy!, chess, checkers, and Go. Ultimately, humans and machines will work together—not against one another. Computers may win at games, but imagine what’s possible when human and machine work together to solve society’s greatest challenges like beating disease, ignorance, and poverty.
>
> Doing so, however, requires a bold and ambitious approach that goes beyond anything that can be achieved through incremental improvements to current technology. Now is the time for greater coordination and collaboration on A.I.

Nadella cites a number of examples of how A.I. is perceived as both a boon and a potential threat, from real-world applications like augmented reality to fictional accounts like *2001: A Space Odyssey* and its rogue HAL 9000 computer. Rather than dwelling on this tension, Nadella suggests that we look instead at the values held by A.I.’s human designers.

> I would argue that perhaps the most productive debate we can have isn’t one of good versus evil: The debate should be about the values instilled in the people and institutions creating this technology. In his book Machines of Loving Grace, John Markoff writes, “The best way to answer the hard questions about control in a world full of smart machines is by understanding the values of those who are actually building these systems.” It’s an intriguing question, and one that our industry must discuss and answer together.

Rather than fretting about the future, Nadella wants the discussion to center on a conscious approach to implementing A.I. He sees the technology as the next platform beyond the operating system and the applications that run on top of it. For Nadella, A.I. will extend these layers to help technology learn about and interact with the physical world, all with the goal of improving human productivity and communication.

According to Nadella, A.I. will represent as large a shift in technology as the Internet did, and its impact will require shared principles and values to guide its development:

> What that platform, or third run time, will look like is being built today. I remember reading Bill Gates’ “Internet Tidal Wave” memo in the spring of 1995. In it, he foresaw the internet’s impact on connectivity, hardware, software development, and commerce. More than 20 years later we are looking ahead to a new tidal wave—an A.I. tidal wave. So what are the universal design principles and values that should guide our thinking, design, and development?

Nadella outlines six key principles for A.I.:

1. A.I. must be designed to assist humanity: As we build more autonomous machines, we need to respect human autonomy. Collaborative robots, or co-bots, should do dangerous work like mining, thus creating a safety net and safeguards for human workers.
2. A.I. must be transparent: We should be aware of how the technology works and what its rules are. We want not just intelligent machines but intelligible machines; not artificial intelligence but symbiotic intelligence. The technology will know things about humans, but humans must also know about the machines. People should understand how the technology sees and analyzes the world. Ethics and design go hand in hand.
3. A.I. must maximize efficiencies without destroying the dignity of people: It should preserve cultural commitments and empower diversity. We need broader, deeper, and more diverse engagement of populations in the design of these systems. The tech industry should not dictate the values and virtues of this future.
4. A.I. must be designed for intelligent privacy: sophisticated protections that secure personal and group information in ways that earn trust.
5. A.I. must have algorithmic accountability so that humans can undo unintended harm. We must design these technologies for the expected and the unexpected.
6. A.I. must guard against bias, ensuring proper and representative research so that the wrong heuristics cannot be used to discriminate.

At the same time, Nadella sets out a few requirements for us humans as we consider how the technology will affect our own development. He singles out empathy, education, creativity, judgment, and accountability as the human traits needed to ensure that A.I. becomes a tool with a positive impact on human life.

It’s a fascinating essay and well worth your time if you are interested in the future of A.I. and in how Microsoft might develop the technology and integrate it into its own products. Once you’ve read the piece, let us know in the comments what you think about Nadella’s vision of the future of A.I.